Your AI Training Cluster is Idle. The Network is to Blame.

September 30, 2025 | Pavan Chaudhari

You’ve invested millions in GPUs — but they may spend half their time just waiting.

Here's why:

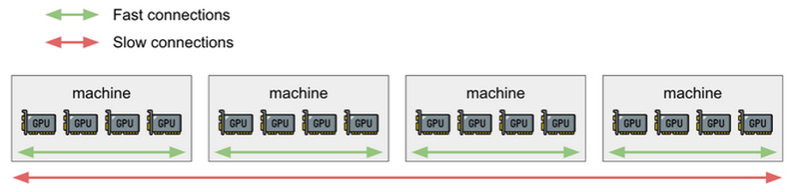

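At the heart of modern LLM training (think GPT-4-class models) lies a critical process: distributed data-parallel training. Each GPU holds a replica of the model (or, for the largest models, a shard of it) and works through its own slice of the data, with the job spread across thousands of GPUs.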

⚙️ After each batch of data is processed, every single GPU must stop and synchronize its gradients (model updates) with every other GPU. This is an all-reduce collective, and it unleashes a communication storm across the entire cluster.
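To get a feel for the volume involved, here is a rough sketch. Gradient synchronization is typically implemented as an all-reduce, and the sketch assumes a ring all-reduce, one common bandwidth-efficient variant (the cluster described here may use a different algorithm). In a ring, each GPU sends about 2(N-1)/N times the gradient size per step. The function names, model size, and link speed are illustrative assumptions.

```python
def ring_allreduce_bytes_per_gpu(gradient_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in one ring all-reduce (reduce-scatter + all-gather)."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single GPU
    return 2 * (num_gpus - 1) / num_gpus * gradient_bytes


def min_sync_seconds(gradient_bytes: float, num_gpus: int, link_gbps: float) -> float:
    """Lower bound on sync time: bytes sent / link bandwidth (ignores latency and loss)."""
    link_bytes_per_s = link_gbps * 1e9 / 8
    return ring_allreduce_bytes_per_gpu(gradient_bytes, num_gpus) / link_bytes_per_s


if __name__ == "__main__":
    # Hypothetical job: 7e9 parameters, 2-byte gradients, 1024 GPUs, 400 Gb/s per GPU.
    grad_bytes = 7e9 * 2
    sent_gb = ring_allreduce_bytes_per_gpu(grad_bytes, 1024) / 1e9
    print(f"{sent_gb:.1f} GB sent per GPU per step")
    print(f"{min_sync_seconds(grad_bytes, 1024, 400):.2f} s minimum per sync")
```

This is a best-case bound. Any retransmission or congestion on the fabric only adds to it, and it repeats on every training step.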

❌ The Straggler Problem: This is where the bottleneck strikes. If just one GPU is slow—due to network latency, packet loss, or congestion—every other expensive processor must wait for it to catch up.
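Why does one slow GPU matter so much at scale? A minimal sketch, assuming each GPU independently has a small chance of being delayed on any given step and that a delayed step takes a fixed, longer time. Both are simplifications, and all names and numbers are hypothetical.

```python
def prob_step_delayed(num_gpus: int, p_slow: float) -> float:
    """Probability that at least one of num_gpus workers is slow on a given step."""
    return 1.0 - (1.0 - p_slow) ** num_gpus


def expected_step_time(num_gpus: int, p_slow: float,
                       normal_s: float, slow_s: float) -> float:
    """Expected duration of a synchronous step: everyone waits for the slowest worker."""
    p = prob_step_delayed(num_gpus, p_slow)
    return p * slow_s + (1.0 - p) * normal_s


if __name__ == "__main__":
    # Hypothetical: each GPU hits a network hiccup on 0.1% of steps.
    for n in (8, 1024, 8192):
        print(n, f"{prob_step_delayed(n, 0.001):.1%} of steps delayed")
```

Under these assumptions, a hiccup that is rare for any single GPU becomes the common case once thousands of GPUs have to finish together.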

The Impact is Staggering:

📉 Even 1% packet loss can crater training efficiency by over 50%. What should be a 10-day training job stretches to 20+ days. This isn't a technical hiccup; it's a massive financial drain on compute resources and a dramatic slowdown in innovation velocity.
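The arithmetic behind the 10-day versus 20-day figure is simple: if the cluster runs at a fraction of its ideal efficiency, wall-clock time scales by the inverse of that fraction. A tiny sketch (the function name is illustrative):

```python
def wall_clock_days(ideal_days: float, efficiency: float) -> float:
    """Actual job duration when the cluster delivers `efficiency` (0 < e <= 1) of ideal throughput."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return ideal_days / efficiency
```

For example, `wall_clock_days(10, 0.5)` returns `20.0`, and anything below 50% efficiency pushes the job past 20 days.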

🛠️ Building the "Lossless Nervous System" for AI

The solution isn't more GPUs; it's a smarter, faster, and more robust network fabric. Here’s how we solve it:

✅ IP-Clos Fabric (The Highway System):

Forget oversubscribed tree networks. A leaf-spine Clos fabric, built with as much uplink as downlink bandwidth on every leaf (1:1 oversubscription), is non-blocking. It provides dedicated, high-speed lanes for the massive "east-west" traffic between GPU racks. No more traffic jams.
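Whether a given leaf-spine design is actually non-blocking comes down to simple port math. A rough sketch, assuming a two-tier fabric with equal-speed ports and one link from every leaf to every spine. The function names and port counts are illustrative, not a specific product's sizing.

```python
def oversubscription_ratio(downlinks: int, downlink_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Leaf oversubscription: host-facing bandwidth / spine-facing bandwidth (1.0 = non-blocking)."""
    return (downlinks * downlink_gbps) / (uplinks * uplink_gbps)


def max_hosts_two_tier(leaf_ports: int, spine_ports: int) -> int:
    """Host ports in a non-blocking two-tier leaf-spine with equal-speed ports.

    Half of each leaf's ports face hosts and half face spines, and each
    spine port connects to one leaf, so the leaf count equals spine_ports.
    """
    hosts_per_leaf = leaf_ports // 2
    return spine_ports * hosts_per_leaf


if __name__ == "__main__":
    print(oversubscription_ratio(32, 400, 32, 400))  # balanced leaf
    print(oversubscription_ratio(48, 100, 8, 400))   # oversubscribed leaf
    print(max_hosts_two_tier(64, 64))
```

A ratio above 1.0 means the leaf can receive more traffic from its hosts than it can forward to the spines, which is exactly the condition that produces congestion during gradient synchronization.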

✅ RoCEv2 (The Teleporter):

RDMA over Converged Ethernet v2 is a game-changer. It carries RDMA over routable UDP/IP, so network adapters can read and write remote memory directly (including GPU memory, when paired with GPUDirect RDMA), bypassing the CPU and OS kernel. This slashes latency and frees up CPU cycles for actual computation.

✅ PFC + ECN (The Air Traffic Control):

To make RoCE work, we need a lossless fabric.

PFC (Priority Flow Control): A targeted "pause" button that prevents buffer overflows and the catastrophic packet drops that kill RDMA performance.

ECN (Explicit Congestion Notification): A smart, early-warning system. Switches mark packets as queues start to build, and the receiver tells the sender to gently slow down before buffers overflow, avoiding the need for brute-force pauses.
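How the two mechanisms fit together can be sketched with a simple queue model. The sketch assumes RED-style ECN marking with a minimum threshold, a maximum threshold, and a maximum marking probability, plus a separate PFC pause (XOFF) threshold set above the ECN range so that ECN reacts first. Threshold names and values are hypothetical, not vendor defaults.

```python
def ecn_mark_probability(queue_kb: float, kmin_kb: float,
                         kmax_kb: float, pmax: float) -> float:
    """Probability of marking a packet as congestion-experienced at a given queue depth."""
    if queue_kb <= kmin_kb:
        return 0.0  # queue is short: no marking
    if queue_kb > kmax_kb:
        return 1.0  # queue is long: mark every packet
    # Linear ramp from 0 at kmin up to pmax at kmax.
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


def pfc_should_pause(queue_kb: float, xoff_kb: float) -> bool:
    """PFC sends a pause frame upstream once the queue reaches the XOFF threshold."""
    return queue_kb >= xoff_kb


if __name__ == "__main__":
    # Hypothetical thresholds: ECN ramps between 200 KB and 800 KB, PFC pauses at 1000 KB.
    for depth in (100, 500, 900, 1000):
        p = ecn_mark_probability(depth, 200, 800, 0.1)
        print(depth, f"mark_p={p:.2f}", "PAUSE" if pfc_should_pause(depth, 1000) else "")
```

The design intent is that senders have already backed off in response to ECN marks long before the queue reaches the pause threshold, leaving PFC as a last-resort safety net.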

✅ SRv6 & EVPN (The Autonomous Control Plane):

- SRv6: Makes the network programmable. It embeds routing instructions into the packet header, enabling seamless traffic steering and automation for dynamic AI workloads.

- EVPN: Provides a robust control plane for managing layer 2 and layer 3 connectivity at scale, essential for secure, multi-tenant AI clusters.
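To make "routing instructions in the packet header" concrete, here is a simplified model of an SRv6 segment list. It follows the general behavior of the IPv6 Segment Routing Header: segments are stored in reverse order, a Segments Left counter points at the active segment, and each segment endpoint decrements it and rewrites the destination address. The class and function names are hypothetical, the addresses come from the IPv6 documentation prefix, and real headers carry more fields than shown.

```python
from dataclasses import dataclass
from ipaddress import IPv6Address


@dataclass(frozen=True)
class SRv6Packet:
    dst: IPv6Address                        # current IPv6 destination (active segment)
    segment_list: tuple[IPv6Address, ...]   # stored in reverse order: index 0 is the last hop
    segments_left: int                      # index of the active segment


def encapsulate(path: list[str]) -> SRv6Packet:
    """Build a packet that must visit `path` in order (path must be non-empty)."""
    segments = tuple(IPv6Address(s) for s in reversed(path))
    segments_left = len(segments) - 1
    return SRv6Packet(segments[segments_left], segments, segments_left)


def process_at_endpoint(pkt: SRv6Packet) -> SRv6Packet:
    """At a segment endpoint: advance to the next segment, if any remain."""
    if pkt.segments_left == 0:
        return pkt  # final segment reached, nothing left to steer
    segments_left = pkt.segments_left - 1
    return SRv6Packet(pkt.segment_list[segments_left], pkt.segment_list, segments_left)


if __name__ == "__main__":
    pkt = encapsulate(["2001:db8::1", "2001:db8::2", "2001:db8::3"])
    while True:
        print(pkt.dst, "segments_left =", pkt.segments_left)
        if pkt.segments_left == 0:
            break
        pkt = process_at_endpoint(pkt)
```

Because the path lives in the packet itself, a controller can steer a flow onto a different path by changing the segment list at the source, without touching per-flow state in the middle of the fabric.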

In the race for AI supremacy, the winner won't be the team with the most GPUs, but the team that can most efficiently connect them. The network is no longer plumbing; it is the central nervous system of artificial intelligence.
